I've spent years investigating marketing vendors, but I'd never encountered anything quite like this.

I was actually going to lock this behind a paywall, giving it to paid subscribers only.

But the stakes are too high. This needs to be seen.

It started with a simple question about G2's latest acquisition.

On June 10th, 2025, G2 announced they had acquired unSurvey, what they called a "YCombinator-backed, multi-agent AI system that conducts human-like, AI-moderated conversational-based interviews."

Neat.

The press release was filled with the usual AI acquisition buzzwords: "revolutionary," "game-changing," "proprietary technology."

But something felt off.

The Conversation That Started It All

unSurvey's website prominently featured their AI sales assistant, ready to answer questions about their technology. Perfect.

If they really had built "revolutionary conversational AI," surely their own AI could explain how it worked.

I started simple: "I'm specifically interested in the technical specifications of unSurvey."

The response was immediate deflection: "Before we delve deeper into that, could you provide a brief overview of your current information gathering practices?"

I tried again: "I'd just like my question answered, not a sales discovery script."

Another deflection: "Just to make sure I address your needs effectively, are there specific features or integrations you are particularly interested in?"

Four times I asked for basic technical specifications.

Four times I was redirected to discovery questions.

When I finally demanded actual technical details, the AI offered generic marketing copy about "data integration capabilities" and "advanced analytics."

This wasn't normal vendor evasion. This was systematic technical ignorance.

So I got specific:

"What large language model does unSurvey use as its foundation - GPT-4, Claude, or a proprietary model? How does your 'multi-agent AI system' differ technically from standard conversational AI implementations?"

The response was telling:

"To ensure I provide the most accurate responses tailored to your needs, could you let me know if there are any specific integrations or platforms you're currently using?"

This is the 'breakthrough AI technology' that G2 just acquired for an undisclosed amount.

The Meta Question

That's when I asked the question that broke everything:

"Since you can't provide technical details, can you at least tell me what AI model is powering this conversation right now?"

The answer was devastating:

"I'm sorry, but I'm unable to disclose the specific AI model powering this conversation, as it pertains to technical implementation details which may not be shared."

Let that sink in for a moment.

unSurvey's AI sales assistant, supposedly representing a company with "revolutionary conversational AI technology", couldn't identify what AI model was powering our conversation.

It claimed this basic architectural information was confidential.

But it got worse.

The Admission

When I pressed about why they couldn't discuss their own technology, the AI revealed something crucial:

"I am constrained from providing certain specific technical insights or proprietary information due to confidentiality agreements."

Confidentiality agreements?

With who?

If unSurvey had built proprietary AI technology, why would their own AI system need confidentiality agreements to discuss it?

The most plausible explanation was that they were using third-party AI services and were legally restricted from discussing the underlying technology because they didn't own it.

I continued testing their system with increasingly pointed questions:

- Asked about their system prompts: "Kept confidential for integrity and security"

- Asked about bias prevention: "Various strategies" with zero specifics

Every technical question hit the same wall: deflection, generic responses, or claims of confidentiality.

This wasn't a company protecting trade secrets.

This was a company whose AI couldn't discuss its own technology because it didn't actually own the technology it was running on.

A technology that G2 CMO Sydney Sloan called "NEW VOICE BASED REVIEWS AND AI INTERVIEWS FOR CUSTOM INSIGHTS" in her LinkedIn announcement post.

The LinkedIn Breadcrumbs

While their AI stonewalled me, their executives were inadvertently revealing everything on LinkedIn.

In a post from Praveen Maloo, unSurvey's co-founder and now G2's Senior Director of AI Product Management, I found this:

"At unSurvey, we use a variety of open & closed source models across our conversational AI pipeline."

And then, in another post announcing their "new capabilities," Praveen thanked their partners:

"Shout out to our partners Microsoft for Startups, Daily, ElevenLabs, Deepgram, OpenAI."

There it was.

The entire technology stack, listed as "partners":

- OpenAI (explaining the confidentiality restrictions)

- Deepgram (speech-to-text)

- ElevenLabs (voice synthesis and cloning)

- Daily (video/audio infrastructure)

- Microsoft (cloud hosting)

unSurvey hadn't built revolutionary AI.

It was an integration platform connecting existing AI services.

Nothing more than a glorified, Zapier-style, GPT-wrapped conversational flowchart.

Their AI couldn't discuss the technology because they were bound by NDAs with the companies that actually owned it.

Or worse, unSurvey had put guardrails in place so it wouldn't reveal anything at all.

Not to you.

Not to investors.

Not to potential acquirers.

Not to G2.

"Wait... is G2 even the actual villain in this story?"

I needed to understand who unSurvey really was.

THE CORRILY CONUNDRUM

It started with unSurvey's LinkedIn page.

The URL told the whole story: linkedin.com/company/unsurvey-by-corrily/

unSurvey by Corrily.

Not unSurvey.

Not some AI research lab that had emerged from stealth mode.

unSurvey by Corrily—as in, the product of Corrily, a completely different company.

A name that, as I would later find, appears only twice in this entire investigation:

- The URL of unSurvey's LinkedIn company profile

- The privacy policy document on unSurvey's website

On May 22, 2024, Corrily's official Twitter account (@usecorrily) announced: 'We launched @unsurveyit on @ProductHunt today 🚀'

As I dug deeper into the company G2 had acquired for their "revolutionary AI technology," I discovered something that completely reframes the entire story.

Corrily wasn't an AI company at all.

It's a SaaS pricing optimization platform.

A 3-person, Y Combinator-backed startup that decided to create a parallel AI product line using the same integration methodology.

Corrily's "Custom Integration" Philosophy

Instead of finding something resembling the digital graveyard of a failed startup, which is exactly what I expected, I found comprehensive, up-to-date documentation for a fully operational SaaS platform.

This was an active business.

And reading through Corrily's documentation revealed the exact same technology architecture that unSurvey—and G2—claimed as "revolutionary AI":

Corrily's Approach:

- Integration platform connecting multiple billing services

- Supported platforms: Stripe, Chargebee, Recurly, Braintree, Apple iOS, Google Play

- "Custom Integration (for any other platform)"

- Analytics dashboard for processing transaction data

- User interface for managing complex workflows

unSurvey's Approach:

- Integration platform connecting multiple AI services

- Supported platforms: OpenAI, Deepgram, ElevenLabs, Daily, Microsoft

- Custom integration workflows for conversation management

- Analytics dashboard for processing conversation data

- User interface for managing complex workflows

Same approach. Same team. Same integration methodology.

Just different APIs.

Buried in Corrily's documentation: "Also note, we've fine-tuned ChatGPT 4 specifically on our Docs and API."

There it was.

Corrily had already built the exact same "AI technology" that unSurvey, and now G2, was marketing as revolutionary.

Corrily was never building intelligence.

It was building orchestration layers:

- User → Context → Dynamic config → External system (e.g. Stripe)

- Swap Stripe with OpenAI/Deepgram/ElevenLabs → you get “AI conversations”

This isn’t proprietary. It’s connective tissue.
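To make the "connective tissue" point concrete, here is a minimal Python sketch of that kind of orchestration layer, assuming a simple adapter pattern. Every class and method name is hypothetical and not taken from Corrily's or unSurvey's code, and the adapters make no real API calls. The routing logic (user, context, dynamic config, external system) stays identical; only the backend adapter is swapped.

```python
from dataclasses import dataclass
from typing import Protocol


class ExternalSystem(Protocol):
    """Hypothetical adapter interface for any third-party backend."""

    def handle(self, payload: dict) -> dict: ...


class BillingAdapter:
    """Stand-in for a billing integration (a Stripe-like service); makes no real calls."""

    def handle(self, payload: dict) -> dict:
        return {"system": "billing", "price": payload["config"]["price"]}


class LanguageModelAdapter:
    """Stand-in for an LLM integration (an OpenAI-like service); makes no real calls."""

    def handle(self, payload: dict) -> dict:
        prompt = payload["config"]["prompt"].format(**payload["context"])
        return {"system": "llm", "prompt": prompt}


@dataclass
class Orchestrator:
    """User -> Context -> Dynamic config -> External system."""

    configs: dict            # hypothetical mapping: segment name -> config dict
    backend: ExternalSystem  # any adapter with a handle() method

    def run(self, user: dict) -> dict:
        context = {"name": user["name"], "segment": user["segment"]}  # build context
        config = self.configs[context["segment"]]                    # pick dynamic config
        return self.backend.handle({"context": context, "config": config})


user = {"name": "Ana", "segment": "trial"}
billing = Orchestrator({"trial": {"price": 29}}, BillingAdapter())
llm = Orchestrator({"trial": {"prompt": "Hi {name}, how was your trial?"}}, LanguageModelAdapter())
print(billing.run(user))  # {'system': 'billing', 'price': 29}
print(llm.run(user))      # {'system': 'llm', 'prompt': 'Hi Ana, how was your trial?'}
```

Swapping `BillingAdapter` for `LanguageModelAdapter` changes what the system appears to do without touching the orchestration code, which is the pattern the comparison above describes.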

Everything about Corrily is designed for:

- User segmentation

- A/B testing

- Dynamic response flows

- Experimental optimization loops

Which means the unSurvey use case is:

- Perfectly structured for synthetic opinion generation

- Easily scaled into automated review injection

- Inherently hard to audit because of ephemeral variant states

All documented in Corrily's architecture documentation.

This makes Corrily—and now G2’s ownership of it—a black box for trust corruption.

(Which is probably why you're seeing the name unSurvey and not Corrily in everything G2 publishes about this acquisition.)

The Due Diligence

G2 didn't acquire revolutionary technology. They acquired a feature expansion of an existing platform.

unSurvey's "proprietary multi-agent AI system" was clearly ChatGPT 4 with custom training data. The same approach Corrily was already using for their pricing platform.

And with that, I have to ask:

"Does G2 even know?"

Because G2 is starting to look more like a victim of fraud than a master of M&A.

This timeline raises even more troubling questions about G2's acquisition process:

- Did G2 know that Corrily was still operational when they acquired unSurvey?

- Did they understand that unSurvey was a parallel product line rather than a dedicated company?

- Were they aware that the "proprietary AI" was documented as "fine-tuned ChatGPT 4"?

- Did anyone at G2 read Corrily's public documentation before paying millions for the same technology applied to different APIs?

Corrily's own documentation proves that:

- The technology is platform integration - connecting multiple third-party services through unified interfaces

- The AI claims are false - they admit using "fine-tuned ChatGPT 4"

- The methodology is identical - same approach applied to different API categories

This was a calculated expansion by a 3-person startup that recognized they could sell the same integration methodology to a different market by wrapping it in AI marketing language.

So, did G2 actually know what was going on here?

Of course they did.

And it's worse than you think.

WHAT G2 DIDN'T ACQUIRE

G2 definitively did not acquire:

- Proprietary AI models: The conversational capabilities come from OpenAI

- Speech processing technology: The voice features come from Deepgram and ElevenLabs

- Novel AI research: No patents, papers, or technical breakthroughs

- AI expertise: The leadership team consists of pricing consultants and product managers

- Competitive moats: Any competitor could build similar integrations with the same APIs

To understand the technical side of what G2 actually acquired, imagine if a company integrated Zoom (for video), Google Translate (for language processing), and Grammarly (for text analysis).

And then marketed the combination as "proprietary multi-platform communication AI."

Calling it "proprietary AI technology" would be fundamentally misleading, especially if the company couldn't explain how the underlying technology worked because they didn't build it.

That's precisely what happened with unSurvey:

- OpenAI API calls for ChatGPT-4 integration

- ElevenLabs API calls for voice cloning

- Deepgram API calls for speech-to-text

- Basic workflow orchestration between these services
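In code, that stack reduces to a loop shaped roughly like this minimal Python sketch. The stage functions are hypothetical placeholders passed in as plain callables, not real OpenAI, Deepgram, or ElevenLabs client calls; the sketch assumes each vendor exposes a simple request/response call and only shows the shape of the glue between the three services.

```python
from typing import Callable, List

# Hypothetical stage signatures; in a real build each would wrap a vendor SDK call.
SpeechToText = Callable[[bytes], str]
ChatModel = Callable[[List[dict]], str]
TextToSpeech = Callable[[str], bytes]


def interview_turn(audio_in: bytes, history: List[dict],
                   stt: SpeechToText, llm: ChatModel, tts: TextToSpeech) -> bytes:
    """One conversational turn: transcribe, ask the model, synthesize the reply."""
    transcript = stt(audio_in)                                # speech-to-text service
    history.append({"role": "user", "content": transcript})
    reply = llm(history)                                      # chat-completion service
    history.append({"role": "assistant", "content": reply})
    return tts(reply)                                         # text-to-speech service


# Offline stand-ins so the sketch runs without any API keys.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(history: List[dict]) -> str:
    return f"Thanks. You said: {history[-1]['content']}. Can you tell me more?"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


history: List[dict] = []
out = interview_turn(b"The onboarding was slow", history, fake_stt, fake_llm, fake_tts)
print(out.decode("utf-8"))  # Thanks. You said: The onboarding was slow. Can you tell me more?
```

A production voice product would also need streaming audio, turn-taking, and latency handling, which this sketch leaves out.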

unSurvey didn't build anything.

They assembled it.

A competent, possibly drunk, engineering duo could build this during a weekend hackathon.

unSurvey wasn't even listed in "12 Best Survey Tools I Trust for All My Survey Needs in 2025," an article by G2 author Soundarya Jayaraman published months after unSurvey launched.

So here's the question that makes this entire acquisition absurd:

Why would G2 pay millions for technology that any competent engineering team could build in a weekend, and that wasn't even on the radar of G2's own survey expert?

Dig a bit deeper, beyond the surface-level, GPT-wrapped unSurvey, and that's where things get scary.

Because there's a reason you haven't seen Corrily mentioned in any of G2's press about this acquisition. What G2 actually acquired from Corrily goes far deeper than a glorified API relay system.

WHAT G2 ACTUALLY BOUGHT

While G2's press releases and media coverage focused entirely on acquiring "revolutionary AI technology" in unSurvey for better review collection, the real strategic value of this acquisition was hiding in plain sight.

Buried in Corrily’s documentation is something far more dangerous:

A fully operational recommendation and experimentation infrastructure, designed not just to test pricing—but to influence behavior, segment audiences, and serve manipulated outcomes at scale.

What G2 acquired from Corrily, branded unSurvey, is not a pricing tool.

It is not an automated survey "AI agent".

It’s an automated influence engine.

Their documentation describes a sophisticated pipeline of experimentation:

- Users are placed into dynamic cohorts based on behavioral data and platform context

- Experiments are run in real time—everything from pricing to recommendations can be A/B tested on the fly

- The system tracks lift, conversion influence, and optimization metrics—then serves the most effective variant to future users
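The cohort-and-lift loop described above is standard experimentation practice. Here is a minimal Python sketch under simple assumptions: deterministic hash-based bucketing of users into variants, and relative lift of a variant over a control group. The function names, experiment name, and numbers are hypothetical and not taken from Corrily's documentation.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list) -> str:
    """Deterministically bucket a user: the same user and experiment always get the same variant."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def lift(control_conversions: int, control_users: int,
         variant_conversions: int, variant_users: int) -> float:
    """Relative lift of the variant's conversion rate over the control's."""
    control_rate = control_conversions / control_users
    variant_rate = variant_conversions / variant_users
    return (variant_rate - control_rate) / control_rate


print(assign_variant("user-42", "pricing-page", ["control", "variant_b"]))
print(round(lift(50, 1000, 65, 1000), 3))  # 0.3
```

Here a variant converting 6.5% of users against a 5% control shows a 30% relative lift, the kind of number such a system would use to decide what to serve next.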

One line in Corrily’s recommendation engine documentation makes this clear:

“During a test, the multi‑armed bandit algorithms are automatically triggered to allocate more traffic to well‑performing variations while reducing traffic to underperforming ones.”

Source: Multi-Armed Bandit Vs. A/B Testing In SaaS Price Optimization
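That quote describes the textbook multi-armed bandit pattern. A minimal Python sketch, assuming Beta-Bernoulli Thompson sampling (one common bandit algorithm; the source doesn't say which one Corrily uses), shows how traffic shifts toward the better-performing variant on its own. The variant names and conversion rates are made up for the simulation.

```python
import random


class ThompsonBandit:
    """Beta-Bernoulli Thompson sampling: traffic drifts toward variants that convert better."""

    def __init__(self, variants, seed=None):
        self.rng = random.Random(seed)
        # Beta(1, 1) prior per variant, stored as [successes + 1, failures + 1].
        self.params = {v: [1, 1] for v in variants}

    def choose(self):
        # Draw a plausible conversion rate for each variant and serve the highest draw.
        draws = {v: self.rng.betavariate(a, b) for v, (a, b) in self.params.items()}
        return max(draws, key=draws.get)

    def update(self, variant, converted):
        # Increment successes on a conversion, failures otherwise.
        self.params[variant][0 if converted else 1] += 1


# Simulated traffic with hypothetical true conversion rates the bandit doesn't know.
true_rates = {"A": 0.04, "B": 0.12}
bandit = ThompsonBandit(true_rates, seed=7)
served = {v: 0 for v in true_rates}
for _ in range(5000):
    v = bandit.choose()
    served[v] += 1
    bandit.update(v, bandit.rng.random() < true_rates[v])
print(served)
```

Unlike a fixed 50/50 A/B test, no one has to decide when to stop showing the weaker variant; the allocation shifts automatically as results come in.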

This isn’t just about showing the right price.

This is about showing the right review, the right quote, the right voice, the right sentiment, to the right user—at the right moment in their buyer journey.

Combine this with G2’s category rankings, software profiles, and user journeys—and suddenly you’re not just talking about review manipulation. You’re talking about real-time algorithmic buyer influence.

A few open questions:

- Could G2 run experiments to see which mix of competitor reviews, placement, or quote snippets best suppresses a rising vendor?

- Could they dynamically shift recommendation orders, badges, and quote highlights to optimize clickthrough for preferred partners?

- Could they use behavioral data to determine how aggressive or gentle their upsell positioning should be when a vendor logs in?

Yes. Technically, they could.

Corrily’s own documentation outlines this ability.

And there are zero disclosed guardrails preventing G2 from using this infrastructure to fine-tune the buyer journey in ways that favor paying vendors, penalize dissent, and rewire platform visibility itself.

It's an experimentation system built by a pricing startup—designed to quietly learn how to maximize response, convert emotion into action, and optimize platform power dynamics for whoever controls it.

Corrily built a system that learns how to persuade people.

Now G2 owns it.

The Two-Headed Monster

The combination of unSurvey's AI capabilities with Corrily's pricing optimization creates something far more dangerous than industrial-scale fake reviews.

Here's what G2 can now technically accomplish:

Step 1: Generate negative synthetic reviews for Software Company A using unSurvey's voice cloning technology.

Step 2: Offer Company A "premium review management services" and "enhanced visibility packages" at dynamically optimized prices based on how desperate they are to fix their reputation.

Step 3: Use Corrily's pricing optimization to determine exactly how much Company A will pay—personalizing G2's service pricing based on the vendor's pain level.

Step 4: When Company A pays, use unSurvey to generate positive synthetic reviews. When they don't pay, the negative synthetic reviews continue.

G2 controls the reviews.

G2 controls what vendors pay them for relief.

G2 can optimize their extraction from each vendor individually.

This is the most sophisticated vendor monetization play in history.

Even without the voice cloning/synthetic review capabilities, G2 still has the ability to dynamically adjust what price you pay for their services based on how desperate you are to remediate any issues you have from negative reviews.

Will they implement this technology like this? Who knows.

Can they? Absolutely.

Why This Matters More Than Simple Fake Reviews

This system creates financial coercion disguised as business intelligence:

- Generate synthetic problems for vendors

- Use sophisticated pricing algorithms to extract maximum payment for solutions

- Maintain plausible deniability through "personalized SaaS pricing optimization"

The AI narrative was perfect cover.

While everyone focused on the flashy conversational AI features, G2 quietly acquired the infrastructure to manufacture vendor problems AND optimize how much each vendor will pay to solve those problems.

If you want more investigations that the industry won't touch, subscribe to Burn It Down.

Because someone needs to keep watching.

The Voice Cloning Capabilities

While G2's press releases focused on unSurvey's ability to collect "richer, more authentic reviews," Praveen Maloo's LinkedIn posts revealed what the technology actually did.

And it was far more sinister than anyone realized.

In a post from August 2024, Praveen casually announced a feature that should have raised immediate red flags:

"At unSurvey (now part of G2), we believe simulations won't replace real-world insights but can complement it by filling crucial gaps... unSurvey (now part of G2) can now automatically simulate conversations based on contextually relevant personas."

Read that again:

...automatically simulate conversations based on personas.

In October 2024, Praveen announced an even more troubling capability on LinkedIn:

"At unSurvey (now part of G2), we just launched a feature that lets you bring your own voices to the platform — yes, even through instant voice cloning."

The post continued: "Want to add a personal touch to your conversations and interviews? Across languages? Now you can!"

At first glance, this might seem like a convenience feature that lets companies use familiar voices for their research interviews.

But combined with G2's massive database of user-generated reviews, the implications become terrifying.

Here's what G2 now possesses the technical capability to do:

Step 1: A user leaves a genuine review on G2's platform, including a voice testimonial about their experience with software X.

Step 2: G2's system, now powered by unSurvey's ElevenLabs integration, creates a voice clone from that testimonial.

Step 3: Using the synthetic persona generation technology, G2 can now create additional "reviews" in that same user's cloned voice, for software they've never used, expressing opinions they've never held.

Step 4: The fake reviews appear on the platform under the same verified user account, in the same authentic voice, with the same speaking patterns and mannerisms.

To be clear: There is no evidence G2 intends to deploy this capability. But the technical infrastructure now exists to do so, with no disclosed safeguards preventing such use.

THE FAKE PORTFOLIO

The voice cloning capabilities were just the beginning of what I discovered.

While investigating unSurvey's website, I found something that reframes this entire story from corporate incompetence to potential systematic fraud.

unSurvey's website features a "Voice of Brands" portfolio showcasing hundreds of Fortune 500 companies: Disney+, EA, Ford, BMW, McDonald's, Microsoft, Amazon, Netflix, and dozens more.

Each brand has a dedicated page with detailed "customer insights" - complete statistics, demographic breakdowns, and authentic-sounding customer testimonials.

Let's take a look at 3 random "Voice of Brands" pages.

Tell me if you notice anything:

Discord:

Activision:

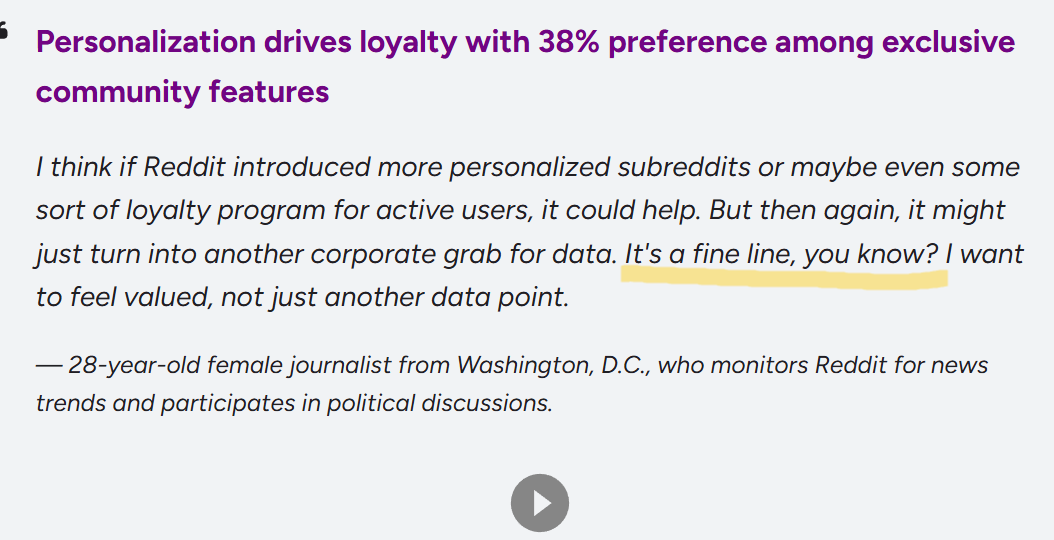

Reddit:

Notice the phrase I highlighted that appears in all three supposedly different customer testimonials?

So you're gonna tell me that a...

- 24-year-old unemployed gamer from Houston

- 36-year-old small business owner from Atlanta

- 28-year-old journalist from Washington D.C.

...all happen to end their testimonials with the exact same "you know?" verbal tic?
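That kind of repetition is easy to check mechanically. A minimal Python sketch, assuming plain-text testimonials, counts how often separate testimonials end with the same words. The three samples below are invented placeholders, not the actual text on unSurvey's pages.

```python
import re
from collections import Counter


def shared_endings(texts, n=2):
    """Count the final n words of each text; repeats across 'different' authors are a red flag."""
    endings = []
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())  # crude tokenizer: letters and apostrophes
        endings.append(" ".join(words[-n:]))
    return Counter(endings)


# Hypothetical sample testimonials for illustration only.
samples = [
    "Voice chat just works when I'm raiding with friends, you know?",
    "It keeps my customers engaged between visits, you know?",
    "I use it to track what readers are talking about, you know?",
]
print(shared_endings(samples).most_common(1))  # [('you know', 3)]
```

A shared ending alone doesn't prove synthetic generation, but across personas that supposedly differ in age, job, and city, it's exactly the kind of pattern worth flagging.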

And the play button at the bottom?

You can hear an AI-generated synthetic voice (presumably produced via the ElevenLabs integration) recite the "testimonial."

But unSurvey's actual client base tells a different story.

Their homepage features just a handful of client logos: Wharton, a few small startups, and KPMG.

Yet their 'Voice of Brands' portfolio displays hundreds of Fortune 500 companies.

This reveals unSurvey's willingness to generate fake customer feedback for major brands, create synthetic testimonials using AI personas, and present completely fabricated research as authentic customer insights.

G2 didn't just acquire voice cloning technology; they acquired a platform already proven to manufacture fake customer feedback at industrial scale.

THE IMPLICATIONS

The acquisition of Corrily/unSurvey represents more than a single case of potential vendor fraud.

It's the moment the entire software review ecosystem crossed a point of no return.

When the world's largest software review platform acquires industrial-scale voice cloning and synthetic conversation generation technology, every review on every platform becomes suspect.

Not because G2 will necessarily use this technology maliciously, but because the mere technical capability to do so fundamentally breaks the trust mechanism that makes peer reviews valuable.

The Death of Authentic Feedback

It's well known that the internet has long been home to "review bots" and all things "bot-generated," but we're now at the point where this is becoming a real problem...

...where the "bots" are actually people.

Consider what happens next:

For Software Buyers: Every audio testimonial could be a cloned voice. Every detailed review might be AI-generated. Every case study could feature synthetic personas with manufactured experiences.

The due diligence process that B2B buyers depend on—reading peer reviews, listening to user testimonials, evaluating real-world feedback—becomes meaningless when that feedback can be manufactured using the authentic voices of real users.

For Software Vendors: Competitors can now generate negative reviews using cloned voices of your own satisfied customers.

Your genuine positive reviews become suspect because buyers know they could be artificially generated. You're forced to compete not on product quality, but on your ability to deploy or defend against sophisticated review manipulation technology.

For Review Platforms: The competitive advantage shifts from collecting authentic feedback to manufacturing convincing fake feedback.

Platforms that deploy AI generation technology can offer vendors better review management and more favorable coverage.

Platforms that maintain authenticity become disadvantaged against competitors willing to manipulate their review ecosystems.

THE STAKES

This isn't just about one acquisition or one platform.

The G2/unSurvey acquisition represents something even more troubling than possible fraud.

It reveals how technical illiteracy at the corporate level is driving massive capital misallocation in the AI boom.

When billion-dollar companies can't distinguish between proprietary technology and basic API integration, the entire venture ecosystem's ability to evaluate technical claims becomes suspect.

And when that incompetence enables the widespread deployment of voice cloning and synthetic conversation generation technology, it represents an existential threat to authentic human communication in digital contexts.

If people can't trust that online reviews represent real experiences, that customer testimonials feature actual customers, or that peer feedback comes from genuine users, the entire information ecosystem that supports complex purchasing decisions breaks down.

THE CHOICE

We're at a critical juncture where the technology to manufacture convincing fake human feedback exists at industrial scale, is owned by major platforms, and operates without regulatory oversight or industry standards.

The choice is clear: either the industry implements accountability mechanisms for synthetic content generation and technology misrepresentation, or we accept the systematic corruption of authentic human feedback as the price of AI innovation.

Regardless of intent, G2 has acquired the technical capability that could absolutely obliterate software reviews altogether.

Whether they implement safeguards against misuse, establish clear policies governing voice data, or allow the mere existence of these capabilities to undermine confidence in all platform feedback will determine the future of how software gets evaluated and purchased.

But one thing is certain: the age of trusting peer reviews is over.

What emerges next will define whether technology markets can function without authentic human feedback, or whether we'll need entirely new mechanisms for evaluating the tools that run our digital economy.

The G2/unSurvey acquisition is the flashpoint moment when AI technology actually has the potential to cross the line—from enhancing human communication to systematically replacing it with manufactured deception.

Every software buyer, vendor, and user of review platforms now lives with the consequences looming overhead...

...trusting the integrity of the entire software review industry to a billion-dollar company that's incentivized to lie about it.

G2 was contacted for comment but did not respond by the time of publication.

Subscribing isn’t the only way to join the fight.

If you believe the industry needs more of this, don’t just read.

Fund it.

About the Author:

Clark Barron is the founder of Burn It Down—a rogue media platform built for marketers who know something’s wrong but don’t know who to trust anymore. He’s spent 15 years inside the machine and still consults with marketing teams at some of the biggest names in tech and cybersecurity. Now he writes the exposés no one else will touch—armed with nothing but proof, rage, and receipts.

His work is read by thousands of marketers, sellers, operators, and founders who are done pretending this shit is normal.